“Dude, you’ve crossed the threshold into big data now. It’s a moment where you have to swallow hard and denormalize.”

Tag Archives: geek

Sometimes it’s not so Good when People are Happy to see You

“There he is!” both my fellow engineers said as I walked into the office this morning. I knew right away that this was not going to be the Monday I expected it to be.

“The database is down,” my boss said before I reached my chair. “Totally dead.”

“Ah, shit,” I replied, dropping my backpack and logging in. I typed a few commands so my tools were pointing at the right place, then started to check the database cluster.

It looked fine, humming away quietly to itself.

I checked the services that allow our software running elsewhere to connect to the database. All the stuff we controlled looked perfectly normal. That wasn’t a surprise; it would take active intervention to break them.

Yet, from the outside, those services were not responding. Something was definitely wrong, but it was not something our group had any control over.

As I was poking and prodding at the system, my boss was speaking behind me. “Maybe you could document some troubleshooting tips?” he asked. “I got as far as the init command, then I had no idea what to do.”

“I can write down some basics,” I said, too wrapped up in my own troubleshooting world to be polite, “but it’s going to assume you have a basic working knowledge of the tools.” I’d been hinting for a while that maybe he should get up to speed on the stuff. Fortunately my boss finds politeness to be inferior to directness. He’s an engineer.

After several minutes confirming that there was nothing I could do, I sent an urgent email to the list where the keepers of the infrastructure communicate. “All our systems are broken!” I said.

Someone else jumped in with more info, including the fact that he had detected the problem long before, while I was commuting. Apparently it had not occurred to him to notify anyone.

The problem affected a lot of people. There was much hurt. I can’t believe that while I was traveling to work and the system went to hell, no one else had bothered to mention it in the regular communication channels, from either the consumer or provider side.

After a while, things worked again, then stopped working, and finally started working for realsies. Eight hours after the problem started, and seven hours after it was formally recognized, the “it’s fixed” message came out, but by then we had been operating normally for several hours.

By moving our stuff to systems run by others, we made an assumption that those others are experts at running systems, and that they could run things well enough for us to turn our own efforts to making new services. It’s an economically parsimonious idea.

But those systems have to work. When they don’t, I’m the one that gets the stink-eye in my department. Or the all-too-happy greeting.

WordPress Geekery: Reversing the Order of Posts in Specific Categories

Most blogs show their most recent posts at the top. It’s a sensible thing to do; readers want to see what’s new. Muddled Ramblings and Half-Baked Ideas mostly works that way, but there are a couple of exceptions. There are two bits of serial fiction that should be read beginning-to-hypothetical-end.

A while back I set up custom page templates for those two stories. I made a WordPress category for each one, and essentially copied the default template and added query_posts($query_string.'&order=ASC'); and hey-presto the two fiction-based archive pages looked just right.

Until infinite scrolling came along. Infinite scrolling is a neat little feature that means that more episodes load up automagically as you scroll down the page. Unfortunately, the infinite scrolling code knew nothing about the custom page template with its tweaked query.

The infinite scrolling is provided by a big, “official” package of WordPress enhancements called Jetpack. The module did have a setting to reverse the order for the entire site, but that was definitely a non-starter. I dug through the code and the Jetpack documentation and found just the place to apply my custom code, a filter called infinite_scroll_query_args. The example even showed changing the sort order.

I dug some more to figure out how to tell which requests needed the order changed, and implemented my code.

It didn’t work. I could demonstrate that my code was being called and that I was changing the query argument properly, but it had no effect on the return data. Grrr.

There is another hook, in WordPress itself, that is called before any query to get posts is executed. It’s a blunt instrument for a case like this, where I only need to change behavior in very specific circumstances (ajax call from the infinite scrolling javascript for specific category archive pages), but I decided to give it a try.

Success! It is now possible to read all episodes of Feeding the Eels and Allison in Animeland from top to bottom, the way God intended.

I could put some sort of settings UI on this and share it with the world, but I’m not going to. But if you came here with the same problem I had, here’s some code, free for your use:

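/**
 * Reverses the order of posts for listed categories,
 * specifically for Jetpack infinite scrolling requests.
 */
function muddled_reverse_category_post_order_pre_get_posts( $query ) {
    // Only act when the request carries a category name in query_args
    // (the infinite scrolling ajax call is assumed to send it this way).
    if ( isset( $_REQUEST['query_args']['category_name'] ) ) {
        $ascCategories = [
            'feeding-the-eels',
            'allison-in-animeland',
        ];
        if ( in_array( $_REQUEST['query_args']['category_name'], $ascCategories, true ) ) {
            $query->set( 'order', 'ASC' );
        }
    }
}
// pre_get_posts is an action hook; add_action() is the conventional way to attach to it.
add_action( 'pre_get_posts', 'muddled_reverse_category_post_order_pre_get_posts' );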

In the meantime, feel free to try the silly serial fiction! It will NOT change your life!

Feeding the Eels

Allison in Animeland

Esoteric Programming Languages

Most readers of this blog are probably familiar, at least by name, with some of the more common programming languages out there. This blog is brought to you courtesy of PHP, and then there are the seminal C and FORTRAN (after all these years, still king of the number-crunchers), the infamous COBOL, well-structured PASCAL, ground-breaking Smalltalk, Sun’s heavily-marketed Java and Microsoft’s counterploy C#, and newcomers like Ruby and Javascript.

There are a lot of computer languages. While there are some pretty striking differences between the above, they all have two things in common. They all involve controlling a computer by writing lines of code, and they were all invented to be useful.

There is another category of language that is not burdened by that second attribute. Useful? Pf. Not bound by the constraints of utility, the whole ‘lines of code’ thing often ceases to apply as well. These non-utile outliers fall under the general category of “esoteric language”, sometimes shortened (but not by me) to esolang.

While I have long been peripherally aware of this category of languages, it took a small email discussion recently, in which a friend of mine mentioned the language Brainfuck (sometimes written b****fuck to avoid offending people), to bring it into focus. Another member of the discussion linked to an amusing top-ten list of odd languages. I read the article and my brain started fizzing.

A programming language that is written as a musical score? Blocks of color that simultaneously convey quantity and program flow*? A language specifically designed to be as difficult as possible to write code in?

Some might ask, “why would anyone bother with such useless languages?” I don’t have an answer to that. I could go on about Befunge and the wire-cross theorem, or about Turing Completeness, but at the end of the day, I think it’s the same answer as one might give to the question “why does one bother writing poetry, when prose is so much clearer?”

Some of the languages are just for fun. INTERCAL (Compiler Language with No Pronounceable Acronym) set out with only one goal: to not be like any other language. For instance, since all coders have long been taught to avoid the GO TO command, INTERCAL instead uses COME FROM. And every now and then you have to say PLEASE. You don’t have to be a geek (but it helps) to enjoy this article about INTERCAL and Befunge (includes a Befunge program to generate Mandelbrot sets).

One can only read about esoteric languages for so long before one must scratch the itch and find a new way to write “Hello, World!” on a screen. I became intrigued by Befunge, a language that is not written as lines of code at all, but as a grid that a pointer moves around on, steered by >, <, ^, and v characters. The language appealed to me for two key reasons: the characters the program cursor encounters can mean different things depending on which direction the cursor is moving at the time, and, even better, you can have the program alter itself by changing the characters in the playfield. So, here’s my Hello, World! in Befunge:

095*0 v 0*59 <

This 'Hello World' program is written in Befunge, a

language invented expressly to be as difficult to compile

as possible.

In fact, with this code, control flow goes straight through

this block of text and uses the p in 'program' as an instruction.

+

v g0*59 < 1

> "H",^

>:0-!|

v <

> "e",^

>:1-!|

v <

> "0"+292*p > "l",^

> :6*9- :9`!|

>:2-!| > $ ^

v <

> 7"0"+273*p "o",^

>:4-!|

v <

> " ",^

>:5-!|

v <

> "W",^

>:6-!|

v <

> "r",^

>:8-!|

v <

> "d",^

>:52*-!|

v <

v<

"

>:65+- !|

"

v "error!"$ <

>:#,_ v

restore the code to the starting state:

> "2"292*p "4"273*p @

Of course, it was absolutely required that the code alter itself at least once, and occasionally have the same symbol mean different things. This program is based on a counter, and when the counter is 0, it outputs an ‘H’. When the counter is at 1, out comes an ‘e’. ‘l’ is a little more complicated; after it matches the 2, the program does a little algebra (6x-9) to come up with 3, with which it then overwrites the 2 in the code. That way, on the next loop, when the counter is 3, we get another ‘l’, and the same equation replaces the 3 with a 9, ready for the ‘l’ in ‘World’.

In the language, ! means ‘not’. At the very bottom, this code evaluates the ! as ‘not’, which causes the program to loop around and pass through “!” (with quotes), turning what had been a program instruction into the exclamation point at the end of “Hello, World!” A nice little exclamation point on my program, as well.

I have now gone back and added an error message should something go wrong, but of course you have to alter the code to make an error actually happen, things being deterministic and whatnot.

You can watch the program execute in Super Slow-Mo with this Javascript Befunge interpreter. It’s kind of fun to watch the Instruction Pointer zip around the code. Paste the above code into the top box, click ‘show’, then click ‘slow’ below. I recommend setting the time to 50ms rather than the default 500.
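If you’d rather poke at Befunge locally than in a browser, here is a minimal interpreter sketch in Python. It handles only a subset of Befunge-93 (no `?`, `/`, `%`, `&`, or `~`), sizes the playfield to the source instead of the standard 80×25, and treats any character it doesn’t know as a no-op. That’s still enough to show the two tricks described above: the pointer’s current direction decides what it reads next, and `p` rewrites the playfield while the program runs. The name `run_befunge` and the step limit are my own, for illustration only.

```python
def run_befunge(source: str, max_steps: int = 100_000) -> str:
    """Run a Befunge-93 program (subset) and return everything it printed."""
    rows = source.split("\n")
    width = max(len(row) for row in rows)
    height = len(rows)
    # Pad rows into a rectangle; cells are mutable lists so `p` can rewrite them.
    grid = [list(row.ljust(width)) for row in rows]
    moves = {">": (1, 0), "<": (-1, 0), "^": (0, -1), "v": (0, 1)}
    x, y, dx, dy = 0, 0, 1, 0
    stack: list[int] = []
    out: list[str] = []
    string_mode = False

    def pop() -> int:
        # Popping an empty stack yields 0 in Befunge.
        return stack.pop() if stack else 0

    for _ in range(max_steps):
        c = grid[y][x]
        if string_mode:
            if c == '"':
                string_mode = False
            else:
                stack.append(ord(c))  # push each character's code
        elif c == '"':
            string_mode = True
        elif c in "0123456789":
            stack.append(int(c))
        elif c in "+-*`":
            b, a = pop(), pop()  # b was on top, a was beneath it
            if c == "+":
                stack.append(a + b)
            elif c == "-":
                stack.append(a - b)
            elif c == "*":
                stack.append(a * b)
            else:
                stack.append(1 if a > b else 0)  # ` means "greater than"
        elif c == "!":
            stack.append(1 if pop() == 0 else 0)  # logical not
        elif c in moves:
            dx, dy = moves[c]
        elif c == "_":
            dx, dy = (1, 0) if pop() == 0 else (-1, 0)  # 0 goes right, else left
        elif c == "|":
            dx, dy = (0, 1) if pop() == 0 else (0, -1)  # 0 goes down, else up
        elif c == ":":
            value = pop()
            stack += [value, value]
        elif c == "\\":
            b, a = pop(), pop()
            stack += [b, a]  # swap the top two values
        elif c == "$":
            pop()
        elif c == ",":
            out.append(chr(pop() % 256))
        elif c == ".":
            out.append(f"{pop()} ")
        elif c == "#":
            x, y = (x + dx) % width, (y + dy) % height  # bridge: skip next cell
        elif c == "g":
            gy, gx = pop(), pop()
            inside = 0 <= gx < width and 0 <= gy < height
            stack.append(ord(grid[gy][gx]) if inside else 0)
        elif c == "p":
            py, px, value = pop(), pop(), pop()
            if 0 <= px < width and 0 <= py < height:
                grid[py][px] = chr(value % 256)  # the program rewrites itself
        elif c == "@":
            return "".join(out)
        # Anything else (spaces, letters) is a no-op; move one cell, wrapping at edges.
        x, y = (x + dx) % width, (y + dy) % height
    raise RuntimeError("step limit reached without hitting @")
```

For example, `run_befunge('"9"70p 1.@')` returns `"9 "` rather than `"1 "`: the `p` overwrites the `1` with a `9` before the pointer gets there.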

Wooo! Big fun! While this language is nearly useless for actually accomplishing anything, I like the way you can make your code physically resemble the problem you are solving. A coworker mentioned writing a tic-tac-toe program with Befunge, and I immediately thought of building a program laid out as a grid of nine segments, one that alters its own paths through the grid as moves are made. It would be awesome.

If I went back to college and majored in computer science, I could get credit for writing it.

In my wanderings, there was one program that completely blew my mind. You remember Brainfuck? There are only eight commands in that language, which makes it relatively simple to write an interpreter. An interpreter is a program that reads the code and executes the instructions as it encounters them, translating them from the (allegedly) human-friendly programming language into machine-friendly instructions for that particular processor. (Compilers do all the translating at once, before the program is executed, while interpreters do it on the fly.)

So here, ladies and gentlemen, is a Brainfuck interpreter, written in another esoteric language, Piet:

(courtesy Danger Mouse — if I’m not mistaken, the inventor of Piet)

That picture right there? That’s a program, written in a Turing-complete language, that implements another Turing-complete language. (**)8-O (the sound you heard was my head exploding.)
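For a sense of just how small those eight commands are, here is a minimal Brainfuck interpreter sketched in boring old Python (far less head-exploding than a picture). It assumes the common conventions of a 30,000-cell tape of byte-sized cells that wrap around, and of reading 0 once input runs out; it does no bounds checking on the data pointer. The name `run_brainfuck` is made up for illustration.

```python
def run_brainfuck(code: str, input_data: str = "") -> str:
    """Interpret a Brainfuck program and return its output as a string."""
    # Pre-match brackets so [ and ] can jump straight to their partner.
    jumps: dict[int, int] = {}
    open_brackets: list[int] = []
    for i, ch in enumerate(code):
        if ch == "[":
            open_brackets.append(i)
        elif ch == "]":
            if not open_brackets:
                raise ValueError(f"unmatched ] at position {i}")
            j = open_brackets.pop()
            jumps[i], jumps[j] = j, i
    if open_brackets:
        raise ValueError(f"unmatched [ at position {open_brackets[-1]}")

    tape = [0] * 30_000  # classic tape length; no bounds checking on ptr
    ptr = pc = 0
    pending_input = iter(input_data)
    out: list[str] = []
    while pc < len(code):
        ch = code[pc]
        if ch == ">":
            ptr += 1
        elif ch == "<":
            ptr -= 1
        elif ch == "+":
            tape[ptr] = (tape[ptr] + 1) % 256  # byte cells wrap around
        elif ch == "-":
            tape[ptr] = (tape[ptr] - 1) % 256
        elif ch == ".":
            out.append(chr(tape[ptr]))
        elif ch == ",":
            tape[ptr] = ord(next(pending_input, "\0")) % 256  # 0 at end of input
        elif ch == "[" and tape[ptr] == 0:
            pc = jumps[pc]  # skip past the loop body
        elif ch == "]" and tape[ptr] != 0:
            pc = jumps[pc]  # go back to the top of the loop
        # Advance; characters other than the eight commands are ignored as comments.
        pc += 1
    return "".join(out)
```

For example, `run_brainfuck("++++++++[>++++++++<-]>+.")` returns `"A"`: the loop adds 8 to the second cell eight times (64), and the final `+` makes it 65.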

______

* In Piet, you can write a program to calculate pi that is a circle.

How to Make a Geek Happy

I once explained in great detail why HTML is the worst thing that ever happened to the Internet. In that episode I was a bit disingenuous: I also snuck in complaints about the protocol that delivers most of that HTML rubbish to your computer, HTTP.

Finally, finally, twenty years later than necessary, the tools are available to make Web applications work like all the other apps on your computer. (If you’re willing to set down your browser, World of Warcraft and its predecessors have been doing this for a long time now. But finally we can have good application design through the browser as well.)

While the primary benefit of this revolution is for the engineers making the apps (whom you as a user have to pay eventually), there are tangible benefits for Joe Websurfer as well. Mainly, things will work better and be snappier. You will curse at your browser about 30% less. (That number brought to you courtesy of the dark place I pulled it from.)

I work in a blissful world where my stuff doesn’t have to work on older browsers, and especially not on Internet Explorer. That means what might be ‘bleeding edge’ for most Web developers is merely ‘leading edge’ for me. I’m starting a new Web application, and it won’t use HTTP. It won’t even use AJAX.

Quick description of HTTP:

Your browser asks the server for something. The server gives it to you, then forgets you ever existed. This is especially crazy when you want your connection to be secure (https), because you have to negotiate encryption keys every damn time. That’s huge overhead when all you want is the user’s middle initial.

And what if something changes on the server that the page showing in the browser should know about? Tough shit, pal. Unless the browser specifically asks for updates, it will never know. Say that item in your shopping cart isn’t available anymore — someone else snapped up the last one. You won’t know your order is obsolete until you hit the ‘check out’ button. The server cannot send messages to the page running in your browser when conditions warrant.

Lots of work has gone into mitigating what a pain in the ass that all is, but the most obvious solution is don’t do it that way. Keep your encrypted connection open, have each side listening for messages from the other, and off you go. The security layer in my new app is so much simpler (and therefore sturdier) that I’m going to save days of development. (Those days saved will go straight to the bottom line at my company, since I’m an operating expense. The effect of my app will also go straight to the bottom line, as I save other people time and energy. Better yet, the people who will be made more efficient are dedicated to making the company more efficient. Those days of development time saved go through three stages of gain. Shareholders, rejoice.)
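The “keep the connection open and let either side talk” idea can be sketched without a browser at all. This is a minimal sketch using Python’s asyncio with a plain, unencrypted TCP socket and newline-delimited messages, standing in for a real WebSocket over TLS (which adds a handshake and message framing that this sketch leaves out). The server answers one request, then, on the same open connection, pushes an update the client never asked for, like the sold-out shopping-cart item. The message names are made up for illustration.

```python
import asyncio


async def handle_client(reader: asyncio.StreamReader,
                        writer: asyncio.StreamWriter) -> None:
    # Answer the client's request...
    request = await reader.readline()
    writer.write(b"reply:" + request)
    await writer.drain()
    # ...then, later, push news over the same open connection, unprompted.
    await asyncio.sleep(0.05)  # stand-in for "something changed on the server"
    writer.write(b"push:item-sold-out\n")
    await writer.drain()
    writer.close()
    await writer.wait_closed()


async def demo() -> list[str]:
    # Port 0 asks the OS for any free port.
    server = await asyncio.start_server(handle_client, "127.0.0.1", 0)
    port = server.sockets[0].getsockname()[1]
    async with server:
        reader, writer = await asyncio.open_connection("127.0.0.1", port)
        writer.write(b"middle-initial\n")
        await writer.drain()
        messages = []
        # Keep listening until the server closes the connection (readline returns b"").
        while line := await reader.readline():
            messages.append(line.decode().strip())
        writer.close()
        await writer.wait_closed()
    return messages
```

Running `asyncio.run(demo())` returns `['reply:middle-initial', 'push:item-sold-out']`. The second message arrives without the client asking again, which plain request/response HTTP can’t do.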

So, that makes me happy. Web Sockets, event-driven servers, a chance to create the Missing Middleware to make the tools out there fly. Bindings over the wire.

Of course, I’m not building it all from scratch; I’m using and improving tools created by those who have gone before me into this ‘software working right’ revolution. It means picking up a whole toolbox at one time, from database to server to client library to extensions of all of the above. There are times while I’m trying to put it all together that it feels like my head is going to explode. In a good way.

But boy, the difference a good book can make. In technical writing, there are two kinds of documentation: tutorial and reference. Mostly I gravitate toward reference materials: I have a specific question and I want to get a specific answer. References are raw information, organized to allow you to get to the nugget you need. Tutorials are training documents; they take you through a sequence to help you build complete understanding of a system.

There are many technical documents that try to be both, or don’t know which they are. We call those docs “shitty”. Then there are videos. SPARE ME THE FUCKING VIDEOS. Videos as a reference: completely worthless; videos as a tutorial: rarely adequate – what was that again?

(I’d be interested to hear from my formally-trained tech writer pals about my above assertions.)

Anyway, on the client side I’m using a library called Backbone, and on top of that Marionette. I like them, but I was starting to get lost in the weeds. The reference material is pretty good, but getting an overall understanding of how the pieces worked together was slow and frustrating. Too many new ideas at once.

So I found a book endorsed by The Guy Who Made Marionette (yeah, The Guy. One guy, having a huge impact on the next generation of Internet applications. Could have been anyone, but there had to be The Guy.) that not only puts the pieces together, but introduces best practices and the reasoning behind them along the way. It may well be the best tutorial-style documentation I’ve run across in this industry. So, hats off to Backbone.Marionette.js: A Gentle Introduction. This book really helped me get my ducks in a row. My fastest learning curve since Big Nerd Ranch oh so very long ago.

So all that makes me a pretty happy geek. Lots to learn, Web applications built right, a new project with lots of creative freedom. And while I’m coming up to speed on the new tools, I already see gaps — the tools are young — including a potentially ground-breaking idea, that I will get to explore.

Can you believe they pay me to do this?

The Wanting and the Having

The other day I went online to learn more about neutral-density filters for photography. The idea is that sometimes there’s too much light, and to manage the light you can crank your lens down, limiting your creativity, or you can essentially give your camera sunglasses. I came across an article by an Australian bloke (rhymes with ‘guy’) who liked to use very very dark filters (“Black Glass”, he called them), to take super-sweet photos of running streams and things like that, using exposures that lasted minutes. A side effect of the technique is colors that reach out of the picture and slap your face.

I was distracted from my original goal of looking at less extreme ND filters, and found myself looking at cameras. The leap from filter to new camera is tenuous at best, but by God I made it.

The day before I had been looking at lenses that were pretty cheap with pretty impressive performance numbers. The catch: they were manual focus. My camera doesn’t have the parts that older cameras have to help a photographer tweak the focus. Auto-focus is so good these days that the extra cost of adding a split prism or whatever just won’t resonate with the typical purchaser.

But there’s another way to get good focus with old-fashioned lenses or practically opaque optics. Live View. Crappy little digicams have had live view for a while now, but for reasons I won’t belabor here, high-end SLRs have only recently gained this power. What it means is this: you can see the picture you’re about to take on the screen on the back of the camera. You can zoom in on the image, choose your favorite eyelash, and adjust the focus until it’s perfect.

My camera, snazzy though it is, doesn’t have Live View. For almost the same reasons, it doesn’t shoot video.

So I started pining for Live View. I have a lens that can really benefit from manual focus, and I plan to substitute pinholes for black glass. The Want took root in my soul.

Flashback: Several years ago, during year zero of the Muddled Age, I was sleeping on my cousin’s sofa in Bozeman, Montana. Let me tell you, there are things to photograph up there. I borrowed his gear for a visit to Yellowstone, got exciting results, and Cousin John set me up with my own rig while I basked in the euphoria of good beer and a few nice photos.

John is a Canon man, and he likes his toys. He filled up a shopping cart at B&H, I said “go”, and shortly thereafter I had a DSLR and three lenses. It’s worth noting that although he likes the high-end stuff, the camera he chose was entry level (though I couldn’t even tell that at the time). The main investment was in the glass, and those lenses have determined my course ever since.

I told anyone who would listen that the Canon 10D was more camera than I would ever need. Perhaps the Gods chortled. The 10D was all the camera I needed for many years, but through a series of events that could not be anticipated but must be appreciated, I began taking a lot of pictures, and I started to feel limited by my camera. No one was more surprised than I was, and no one was more pleased.

My upgrade was a big jump: 10D to a used 5D I bought off a coworker. Canon’s wacky numbering system goes 10-20-30-40-50-7-6-5-1. I think there’s a 60, too. Not all those numbers are available anymore. Anyway, I went 10D to 5D and it was a huge jump, and, gratifyingly, my pictures improved. I wasn’t just buying gear for the bragging rights.

Back to now: Honestly, I’m not feeling that limited by the 5D. It can take a pretty picture or two. But the 5D mark II has way more pixels, and Live View, and video. The person who sold me her 5D had just bought a Mark II. Now there’s the Mark III, out last spring to great excitement. It is a ridiculously awesome piece of consumer electronics. The biggest improvement over the Mark II: it’s much better in low light.

I started following Mark IIs on eBay. They are still manufactured, largely because people who have studio lights don’t need the Mark III’s most compelling feature. I have lights. I’m getting better at using them. Mark II is enough. Mark II is enough. Mark II is enough.

But you know what? I shoot other places than the studio. Sometimes I don’t control conditions and I have to adapt. A more versatile camera gives me more options. I spent ridiculous hours doing ‘research’, weighing the 5D Mark II, the 5D Mark III, and the brand-new 6D, which has some intriguing features. This research happened over the course of several nights after the light of my life had gone to bed, and resulted in what must have seemed a fiat to her: we need a Mark III. (Yes, “we”.) I haven’t hit the ceiling on the camera I have, and I was calling for a new one. A really expensive new one. I tried to play it cool, but inside I was a knot of commercial lust.

A funny thing happens when I relate this story to the folks around me: “At least you actually take pictures,” is the almost universal response. That I do, that I do. But I still feel something of the poseur when I indulge myself this way. I get good shots with the 5D I already own. It is for my own pride that I feel I have to develop my abilities to warrant carrying a prestige item like the Mark III. I can’t feel proud of owning it; that’s just a matter of spending money. That sucker’s in the mail now, and I’d better do something to justify holding it.

Also, I check the shipping status roughly every fifteen minutes. I’m giddier than a schoolgirl on free pony night.

The Coolest Weather Map Ever

Just take a look: the coolest weather map ever

Details of What?

An Online Community that I can Get Behind

Since there are others using my server now, I thought it would be a good idea to upgrade my backup practices. I looked around a bit, hoping for a solution that was free, butt-simple to set up, and automatic, so I would never have to think about it again. I don’t like thinking when I don’t have to.

I came across CrashPlan, the backup solution my employer uses. Turns out their software is free to chumps like me; they make their cash providing a place for you to put that valuable information.

There are two parts to any backup plan: you must gather your data together and you must put it somewhere safe that you can get to later. The CrashPlan software handles the gathering part, making it easy, for instance, to save all my stuff to the external hard drive sitting on my desk, but if the house burns down that won’t do me much good.

Happily CrashPlan also makes it easy to talk to remote computers, provided they have the software installed. I put CrashPlan on my server in a bunker somewhere in Nevada, and now this site and a couple of others are saved automatically to my drive in California as well. Easy peasy! Any computer signed up under my account can make backups to any other.

But wait! There’s more! The cool idea CrashPlan came up with was letting friends back each other up. I give you a special code and you can put backups of your stuff on my system. I can’t see what you saved, it’s all encrypted. But unless both our houses burn down at the same time, there’s always a safe copy.

Sure, if you pay you get more features and they will store your stuff in a safe place where you don’t have to wait if I happen to be on vacation, but for free that’s not bad at all. The idea of friends getting together and forming a backup community appeals to me as well. It’s a great way for geeks to look out for one another.

Step-by-Step LAMP server from scratch with MacPorts

Getting Apache, PHP, and MySQL installed and talking to each other is pretty simple — until something doesn’t come out right. This guide takes things one step at a time and checks each step along the way.

The good news is that because of the changes, you may not need this guide anymore, but I’ll update it when I get a chance to do a new install, just to keep my own head on straight.

Using MacPorts to build a LAMP server from scratch

About this tutorial:

There are other step-by-step guides out there, and some of them are pretty dang good. But I’ve never found one that I could go through and reach the promised land without a hitch. (Usually the hitches happen around MySQL.) Occasionally key points are glossed over, but I think mostly things have changed and the tutorials haven’t been updated. Now, however, I’ve done this enough times that there are no hitches anymore for me. Since MacPorts occasionally changes things, I’ll note at the top of this page the last time this recipe was used exactly as written here.

This guide breaks things down into very small steps, so each step is simple. I include tests for each stage of the installation, so problems can be spotted while they're easy to trace. We get each piece working before moving on to the next. I spend a little time telling you what it is you accomplish with each step, because a little understanding can really help when it’s time to troubleshoot, and if things are slightly different you have a better chance of working through them.

Audience: This guide is designed to be useful to people with only a passing familiarity with the terminal. More sophisticated techno-geeks may just want to go through the sequence of commands, and read the surrounding material only when something doesn't make sense to them. The goal: follow these steps and it will work every damn time.

MacOS X versions: Tiger, Leopard, Snow Leopard, Lion. Maybe others, too. The beauty of this method is it doesn’t really matter which OS X version you have.

The advantages of this approach

There are multiple options for setting up a Mac running OS X to be a Web server. Many of the necessary tools are even built right in. Using the built-in stuff might be the way for you to go, but there are problems: it’s difficult to customize (search for “install apc Mac” and you’ll see what I mean), you don’t control the versions of the software you install, and when you upgrade MacOS versions things could change out from under you.

Also simple to set up is MAMP, which is great for developing but not so much for deployment. For simple Web development on your local machine, it’s hard to beat.

But when it comes right down to it, for a production server you want control and you want predictability. For that, it’s best to install all the parts yourself in a known, well-documented configuration, that runs close to the metal. That’s where MacPorts comes in. Suddenly installing stuff gets a lot easier, and there’s plenty of documentation.

Holy schnikies! A new timing exploit on OpenSSL! It may be months before Apple's release fixes it. I want it sooner!

What you lose:

If you’re running OS X Server (suddenly an affordable option), you get some slick remote management tools. You’ll be saying goodbye to them if you take this route. In fact, you’ll be saying goodbye to all your friendly windows and checkboxes.

Also, I have never, ever, succeeded in setting up a mail server, MacPorts or otherwise, and I’ve tried a few different ways (all on the same box, so problems left over from one may have torpedoed the next), and no one I've ever met, even sophisticated IT guys, likes this chore. If serving mail is a requirement, then OS X Server is probably worth the loss of control. Just don’t ever upgrade your server to the next major version. (Where’s MySQL!?! Ahhhhhh! I hear frustrated sys admins shout.)

So, here we go!

Document conventions

There are commands you type, lines of code you put in files, and other code-like things. I've tried to make it all clear with text styles.

This is something you type into the terminal | Do not type the |

This is a line of code in a file. | Either you will be looking for a line like this, or adding a line like this. |

| This is a reference to a file or a path. |

Prepare the Box

- Turn off unneeded services on the server box. Open System Preferences and select Sharing.

- Turn on Remote Login

- (optional) Turn on Screen Sharing

- Turn off everything else, especially Web Sharing and File Sharing

- Install Xcode. This provides tools that MacPorts uses to build the programs for your machine. You can get Xcode for free from the App Store. It’s a huge download. Note that after you download it, you have to run the installer. It may launch Xcode when the install is done, but you can just Quit out of it. Lion and Mountain Lion require an extra step to install the command-line tools that MacPorts uses.

- Install MacPorts. You can download the installer from http://www.MacPorts.org/install.php (make sure you choose the .dmg that matches the version of MacOS you are running). Run the installer and get ready to start typing.

- Now it’s time to make sure MacPorts itself is up-to-date. Open terminal and type

sudo port selfupdate

password: <enter your admin password>

- If you're not familiar with sudo, you will be soon. It gives you temporary permission to act as the root user for this machine. Every once in a while during this process you will need to type your admin password again.

- May as well get into the habit of updating the installed software while we’re at it. Type

sudo port upgrade outdated

- Make a habit of running these commands regularly. One of the reasons you're doing this whole thing is to make sure your server stays up-to-date. This is how you do it.

Install Apache

- Now it’s time to get down to business. All the stuff we’ve installed so far is just setting up the tools to make the rest of the job easier. Let’s start with Apache!

sudo port install apache2

- This may take a little while. It’s actually downloading code and compiling a version of the server tailored to your system. First it figures out all the other little pieces Apache needs and makes sure they’re all installed correctly. Hop up and grab a sandwich, or, if you're really motivated, do something else productive while you wait.

- When the install is done, you will see a prompt to execute a command that will make Apache start up automatically when the computer is rebooted. Usually you will want to do this. The command has changed in the past, so be sure to check for the message in your terminal window. As of this writing, the command is:

sudo port load apache2

- Create an alias to the correct apachectl. apachectl is a utility that allows you to do things like restart Apache after you make changes. The thing is, the built-in Apache has its own apachectl. To avoid confusion, you can either type the full path to the new apachectl every time, or you can set up an alias. Aliases are commands you define. In this case you will define a new command that executes the proper apachectl.

- In your home directory (~/) you will find a file called .profile - if you didn’t have one before, MacPorts made one for you. Note the dot at the start. That makes the file invisible; Finder will not show it. In terminal you can see it by typing

ls -a ~/

- Edit ~/.profile and add the following line:

alias apache2ctl='sudo /opt/local/apache2/bin/apachectl'

- Edit how? See below for a brief discussion about editing text files and dealing with file permissions.

- ~/.profile isn't the only place you can put the alias, but it works.

- You need to reload the profile info for it to take effect in this terminal session.

source ~/.profile

- Now anywhere in the docs it says to use apachectl, just type apache2ctl instead, and you will be sure to be working on the correct server.
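If you ever re-run these steps (on a new machine, say), it's easy to paste the same alias into ~/.profile twice. Here's a small sketch of a bash function that appends a line only when that exact line isn't already in the file. The name add_line_once is hypothetical; it relies only on standard grep, tail, and printf.

```shell
# Hypothetical helper: append LINE to FILE unless FILE already contains
# exactly that line, so re-running a setup step doesn't pile up duplicates.
# Usage: add_line_once FILE LINE
add_line_once() {
    # Create the file if it doesn't exist yet.
    if [ ! -e "$1" ]; then
        : > "$1"
    fi
    # If the file doesn't end with a newline, add one so the new line isn't
    # glued onto the last one. $(...) drops a trailing newline, so the
    # result is non-empty only when the last byte is something else.
    if [ -s "$1" ] && [ -n "$(tail -c 1 "$1")" ]; then
        printf '\n' >> "$1"
    fi
    # -q: quiet, -x: whole-line match, -F: treat LINE as plain text.
    if ! grep -qxF -- "$2" "$1"; then
        printf '%s\n' "$2" >> "$1"
    fi
}
```

For example, add_line_once ~/.profile "alias apache2ctl='sudo /opt/local/apache2/bin/apachectl'" followed by source ~/.profile.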


- Start Apache:

apache2ctl start

- Test the Apache installation. At this point, you should be able to go to http://127.0.0.1/ and see a simple message: “It works!”

- MILESTONE - Apache is up and running!

Install PHP

- Use MacPorts to build PHP 5:

sudo port install php56-apache2handler

- Time passes and I don't always update the php version number. At this moment the latest php version is 5.6, so you use php56 everywhere. If you want to install php 7.0, you would use php70. You get the idea.

- MacPorts will know it needs to install php56 before it can install the apache2 stuff.

- You could install the MySQL extensions to PHP now (sudo port install php56-mysql), but that will cause MySQL to be installed as well. It’s no biggie, but I like to make sure each piece is working before moving on to the next. It makes problem-solving a lot easier. So, let’s hold off on that.

- Choose your php.ini file. There are a couple of different options that trade off security for convenience (error reporting and whatnot). As of this writing there is php.ini-development (more debugging information, less secure) and php.ini-production. Copy the one you want to use and name it php.ini:

sudo cp /opt/local/etc/php56/php.ini-development /opt/local/etc/php56/php.ini

- Test the PHP install

- On the command line, type

php56 -i

- A bunch of information will dump out. Hooray!


- Make your new php the default. This will allow you to run scripts from the command line just by typing 'php', rather than having to specify 'php56'.

- On the command line, type

sudo port select --set php php56

- Test this by typing

which php

You will see /opt/local/bin/php, which is where MacPorts keeps a pointer to the version you specified.


- Now it’s time to get Apache and PHP talking to each other. Apache needs to know that PHP is there, and when to use it. There’s a lot of less-than-ideal advice out there about how to do this.

- httpd.conf is the heart of the Apache configuration. Mess this up, Apache won’t run. It’s important, therefore, that you MAKE A BACKUP (there’s actually a spare copy in the install, but you never rely on that, do you?)

cd /opt/local/apache2/conf
sudo cp httpd.conf httpd.conf.backup
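A single httpd.conf.backup gets overwritten the next time you make a backup. If you'd rather keep a trail, here's a sketch of a bash function (the name backup_file is hypothetical) that copies a file to a new name stamped with the date and time. It calls sudo itself for the copy, because the files under /opt/local belong to root and sudo can't run a shell function directly.

```shell
# Hypothetical helper: copy FILE to FILE.backup-YYYYmmdd-HHMMSS and print
# the new name, so earlier backups are kept rather than overwritten.
# Usage: backup_file /opt/local/apache2/conf/httpd.conf
backup_file() {
    local src=$1 dest
    if [ ! -f "$src" ]; then
        echo "backup_file: no such file: $src" >&2
        return 1
    fi
    dest="$src.backup-$(date +%Y%m%d-%H%M%S)"
    # -p keeps the original's permissions and modification time.
    sudo cp -p "$src" "$dest" && printf '%s\n' "$dest"
}
```

Running backup_file /opt/local/apache2/conf/httpd.conf prints the name of the copy it just made.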

- First run a little utility installed with Apache that supposedly sets things up for you, but actually doesn’t do the whole job:

cd /opt/local/apache2/modules
sudo /opt/local/apache2/bin/apxs -a -e -n php5 mod_php56.so

- The utility added the line in the Apache config file that tells it that the PHP module is available. It does not tell Apache when to use it. There is an extra little config file for that job, but it’s not loaded (as far as I can tell), and it’s not really right anyway. Let's take matters into our own hands.

- It won't let me save! See below for a brief discussion about editing text files and dealing with permissions.

- Time to edit! Open /opt/local/apache2/conf/httpd.conf with permission to edit it. We need to add three lines; one to tell it that PHP files are text files (not strictly necessary but let’s be rigorous here), and two lines to tell it what to do when it encounters a PHP file.

- Search for the phrase AddType in the file. After the comments (lines that start with #) add:

AddType text/html .php

- Search for AddHandler (it’s just a few lines down) and add:

AddHandler application/x-httpd-php .php
AddHandler application/x-httpd-php-source .phps

- Finally, we need to tell Apache that index.php is every bit as good as index.html. Search in the config file for index.html and you should find a line that says DirectoryIndex index.html. Right after the html file, put index.php:

- Before:

DirectoryIndex index.html

- After:

DirectoryIndex index.html index.php
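If you'd rather not hunt for the line by hand, the DirectoryIndex change can be scripted. This is a rough sketch, not part of the recipe above: a hypothetical bash function, add_index_php, that appends index.php to any active DirectoryIndex line that doesn't already mention it. It writes to a temporary file and then copies the result over the original with sudo cp, which keeps the file's existing owner and permissions. Make your backup first.

```shell
# Hypothetical helper (not part of Apache or MacPorts): append " index.php"
# to every active DirectoryIndex line that doesn't already list it.
# Commented-out lines (first field "#...") are left alone.
# Usage: add_index_php /opt/local/apache2/conf/httpd.conf
add_index_php() {
    local conf=$1 tmp status
    tmp=$(mktemp "${TMPDIR:-/tmp}/httpd.XXXXXX") || return 1
    awk '
        $1 == "DirectoryIndex" && $0 !~ /index\.php/ {
            sub(/[ \t]*$/, " index.php")   # also trims trailing blanks
        }
        { print }
    ' "$conf" > "$tmp" && sudo cp "$tmp" "$conf"
    status=$?
    rm -f "$tmp"
    return "$status"
}
```

Running it a second time changes nothing, since the line already lists index.php.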


- (Optional) As long as we’re in here, let’s make one more change for improved security. Search for the line that specifies the default options for Apache and remove Indexes:

- Before:

Options Indexes FollowSymLinks

- After:

Options FollowSymLinks
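If you'd like to script the optional Indexes change, here is a hypothetical sketch. remove_indexes_option strips the word Indexes from every active Options line in the file, not just the one in the default directory block, so look over the result before restarting. It assumes each Options line keeps at least one other option, such as FollowSymLinks. Like a hand edit, it needs root to write the file, so it copies its output back with sudo cp.

```shell
# Hypothetical helper (not part of Apache or MacPorts): remove the Indexes
# option from every active "Options" line so Apache won't list the files
# in a directory that has no index page. Comment lines are skipped because
# their first field is "#", not "Options".
# Usage: remove_indexes_option /opt/local/apache2/conf/httpd.conf
remove_indexes_option() {
    local conf=$1 tmp status
    tmp=$(mktemp "${TMPDIR:-/tmp}/httpd.XXXXXX") || return 1
    awk '
        $1 == "Options" { gsub(/[ \t]+Indexes/, "") }
        { print }
    ' "$conf" > "$tmp" && sudo cp "$tmp" "$conf"
    status=$?
    rm -f "$tmp"
    return "$status"
}
```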


- Save the file.


- Check the config file syntax by typing

/opt/local/apache2/bin/httpd -t

You should see a Syntax OK message. If there is an error, the file and line number should be listed.

- Restart Apache:

apache2ctl restart


- Test whether PHP and Apache can be friends. We will modify the “It Works!” file to dump out a bunch of info about your PHP installation.

- Currently the default Apache directory is /opt/local/apache2/htdocs

- Start by renaming index.html to index.php:

cd /opt/local/apache2/htdocs
sudo mv index.html index.php

- Edit the file, and after the It Works! bit add a PHP call so the result looks like this:

<html><body><h1>It works!</h1><?php phpinfo(); ?></body></html>

- Save the file

- Go to http://127.0.0.1 - you should see a huge dump of everything you wanted to know about your PHP but were afraid to ask.

- MILESTONE - Apache and PHP are installed and talking nice to each other.

Install and configure MySQL

- Use MacPorts to install MySQL database and server and start it automatically when the machine boots:

sudo port install mysql55-server
sudo port load mysql55-server

- Now we get to the trickiest part of the whole operation. There's nothing here that's difficult, but I've spent hours going in circles before, and I'm here so you won't find yourself in that boat as well. MySQL requires some configuration before it can run at all, and it can be a huge bother figuring out what’s going on if it doesn’t work the first time. We start by running a little init script:

sudo -u _mysql /opt/local/lib/mysql55/bin/mysql_install_db

- As with Apache, you can create a set of aliases to simplify working with MySQL. There are some commands you will run frequently; things get easier if you don’t have to type the full path to the command every time. Open up ~/.profile again and add the following three aliases, plus a temporary addition to the path so the next step will work:

alias mysqlstart='sudo /opt/local/share/mysql55/support-files/mysql.server start'
alias mysql='/opt/local/lib/mysql55/bin/mysql'
alias mysqladmin='/opt/local/lib/mysql55/bin/mysqladmin'
#TEMPORARY addition to the path so the next step will work
export PATH=/opt/local/lib/mysql55/bin:$PATH

Then reload your profile:

source ~/.profile

- Next we need to deal with making the database secure and setting the first all-important password. The most complete way to do this is running another utility that takes you through the decisions.

sudo /opt/local/lib/mysql55/bin/mysql_secure_installation

Remember the password you set for the root user!

- You now have a MySQL account named root, which is not the same as the root user for the machine itself. When using sudo you will use your admin password (as you have been all along), but when invoking mysql or mysqladmin you will enter the password for the database root account.

- As with PHP above, MySQL has example config files for you to choose from. The config file can be placed in a bunch of different places, and depending on where you put it, it will override settings in other config files. If you follow this install procedure, you don’t actually need to do anything with the config files; we’ll just be using the factory defaults. But things will work better down the road if you choose a config that roughly matches the way the database will be used.

- Find where the basedir is. As of this writing it’s /opt/local, and that’s not likely to change anytime soon, but why take that for granted when we can find out for sure? Let's make a habit of finding facts when they're available instead of relying on recipes like this one.

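mysqladmin -u root -p variables
Password: <enter MySQL root user's password>

In the output, look for the basedir row. While you're there, copy the value of the socket row; you'll need it later when we teach PHP where to find MySQL.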
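The variables command prints a long table. If you'd rather not squint at it, here's a sketch of a small bash function that picks one value out. The name mysql_var is hypothetical, and it assumes the usual boxed layout, where each data row looks like | name | value |.

```shell
# Hypothetical helper: print the value of one variable from the table that
# "mysqladmin variables" writes. Reads the table on standard input and
# returns non-zero if the variable isn't found.
# Usage: mysqladmin -u root -p variables | mysql_var basedir
mysql_var() {
    awk -F'|' -v name="$1" '
        {
            # Field 2 is the variable name, field 3 its value; trim blanks.
            key = $2; val = $3
            gsub(/^[ \t]+|[ \t]+$/, "", key)
            gsub(/^[ \t]+|[ \t]+$/, "", val)
            if (key == name) { print val; found = 1; exit }
        }
        END { if (!found) exit 1 }
    '
}
```

Swap basedir for socket in the usage line to get the socket path. The -p password prompt still appears in Terminal, because mysqladmin reads the password from the terminal rather than from the pipe.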

- Now it’s time to choose which example config file you want to start with. The examples are in /opt/local/share/mysql55/support-files/, and each has a brief explanation at the top that says what circumstances it’s optimized for. You can read those, or just choose one based on the name. If you have no idea how big your database is going to be, medium sounds nice. You can always swap it out later.

sudo cp /opt/local/share/mysql55/support-files/my-medium.cnf <basedir>/my.cnf


- Test your MySQL root login. On the command line, type

mysql -u root -p
Password: <enter MySQL root user's password>

If you land at a mysql> prompt, you're in. Leave with:

exit

Teach PHP where to find MySQL

The database is up and running; now we need to give PHP the info it needs to access it. There's a thing called a socket that the two use to talk to each other. Like a lot of things in UNIX the socket looks like a file.

The default MySQL location for the socket is in /tmp, but MacPorts doesn’t play that way. There are a couple of reasons that /tmp is not an ideal place for the socket anyway, so we’ll do things the MacPorts way and tell PHP that the socket is not at the default location. To do this we edit /opt/local/etc/php56/php.ini.

There are three places where sockets are specified, and they all need to point to the correct place. Remember when you saved the socket variable from MySQL before? Copy that value, then search in your php.ini file for the three places where it says default_socket:

pdo_mysql.default_socket = <paste here>
. . .
mysql.default_socket = <paste here>
. . .
mysqli.default_socket = <paste here>

In each case the whatever = part will already be in the ini file; you just need to find each line and paste in the correct path.

- While we’re editing the file, you may want to set a default time zone. This will alleviate hassles with date functions later.
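If you'd rather script the socket edits than make them by hand, here is a rough sketch: a hypothetical bash function, set_php_socket, that rewrites any active pdo_mysql, mysql, or mysqli default_socket line so it points at the path you give it, leaving commented-out lines (those starting with ;) alone. It writes to a temporary file and copies the result back with sudo, since php.ini is owned by root. It assumes the socket path contains no backslashes.

```shell
# Hypothetical helper (not part of PHP or MacPorts): point the pdo_mysql,
# mysql, and mysqli default_socket settings in php.ini at one socket path.
# Commented-out lines start with ";" and are left untouched.
# Usage: set_php_socket /opt/local/etc/php56/php.ini '<socket path>'
set_php_socket() {
    local ini=$1 sock=$2 tmp status
    tmp=$(mktemp "${TMPDIR:-/tmp}/php.XXXXXX") || return 1
    awk -v sock="$sock" '
        /^(pdo_mysql|mysql|mysqli)\.default_socket[ \t]*=/ {
            prefix = $0
            sub(/\.default_socket.*/, "", prefix)   # keeps "pdo_mysql", etc.
            print prefix ".default_socket = " sock
            next
        }
        { print }
    ' "$ini" > "$tmp" && sudo cp "$tmp" "$ini"
    status=$?
    rm -f "$tmp"
    return "$status"
}
```

Pass it the socket value you copied from the mysqladmin output.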

- Finally, we need to install the PHP module that provides PHP with the code to operate on MySQL databases.

sudo port install php56-mysql

- Restart Apache:

apache2ctl restart

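- Test the connection.

- First test: on the command line, type

php56 -i | grep -i 'mysql'

You should see a list of MySQL-related settings, including the default_socket paths you just set.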

- Second test: The whole bag of marbles. You ready for this?

- In the Apache’s document root (where the index.php file you made before lives), create a new file named testmysql.php

- In the file, paste the following:

<?php
$dbhost = 'localhost';
$dbuser = 'root';
$dbpass = 'MYSQL_ROOT_PASSWRD';
$conn = mysqli_connect($dbhost, $dbuser, $dbpass);
if ($conn) {
    echo 'CONNECT OK';
} else {
    // mysqli_connect_error() explains why the connection failed.
    die('Error connecting to mysql: ' . mysqli_connect_error());
}
$dbname = 'mysql';
mysqli_select_db($conn, $dbname);

- Edit the file to replace MYSQL_ROOT_PASSWRD with the password you set for the root database user.

- Save the file.

- In your browser, go to http://127.0.0.1/testmysql.php

- You should see a message saying “CONNECT OK”

- DELETE THE FILE. It's got your root password in it!

sudo rm /opt/local/apache2/htdocs/testmysql.php


- MILESTONE - Apache, PHP, and MySQL are all working together. High-five yourself, bud! You are an IT God!

Set up virtual hosts

Finally, we will set up virtual hosts. This allows your server to handle more than one domain name. Even if you don't think you need more than one domain, it's a safe bet that before long you'll be glad you took care of this ahead of time.

We will create a file that tells Apache how to decide which directory to use for what request. There is an example file already waiting for us, so it gets pretty easy.

- Tell Apache to use the vhosts file. To do this we make one last edit to httpd.conf. After this, all our tweaks will be in a separate file so we don’t have to risk accidentally messing something up in the master file.

- In /opt/local/apache2/conf/httpd.conf, find the line that says

#Include conf/extra/httpd-vhosts.conf

- Remove the # at the start of that line so it reads Include conf/extra/httpd-vhosts.conf. The # told Apache to ignore the include command. Take a look at all those other files it doesn’t include by default. Some of them might come in handy someday...

- Save the file and restart Apache - the warnings you see will make sense soon.
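If you'd rather script that edit, here's a sketch of a hypothetical bash function, enable_vhosts, that removes the leading # from the httpd-vhosts.conf Include line and leaves every other commented-out Include alone. It writes to a temporary file, then copies the result back with sudo because httpd.conf is owned by root.

```shell
# Hypothetical helper (not part of Apache or MacPorts): uncomment the
# Include line for httpd-vhosts.conf and nothing else.
# Usage: enable_vhosts /opt/local/apache2/conf/httpd.conf
enable_vhosts() {
    local conf=$1 tmp status
    tmp=$(mktemp "${TMPDIR:-/tmp}/httpd.XXXXXX") || return 1
    # Drop the leading "#" (and any blanks after it) from that one line.
    sed 's|^#[[:space:]]*\(Include conf/extra/httpd-vhosts\.conf\)|\1|' \
        "$conf" > "$tmp" && sudo cp "$tmp" "$conf"
    status=$?
    rm -f "$tmp"
    return "$status"
}
```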

- Test by going to your old friend http://127.0.0.1

- Forbidden! What the heck!?! Right now, that's actually OK. The vhosts file is pointing to a folder that doesn't exist and even if it did it would be off-limits. All we have to do is modify the vhosts file to point to a directory that actually does exist, and tell Apache it's OK to load files from there.


- Before going further, it's probably a good idea to figure out where you plan to put the files for your Web sites. I've taken to putting them in /www/<sitename>/public/ - not through any particular plan, but this gives me full control over permissions if I should ever need to chroot any of the sites. The Apple policy of putting sites in User can be useful, but really only if you plan to have one site per user. Otherwise permissions get tricky. The public part is so you can have other files associated with the site that are not reachable from the outside.

- Set up the default host directory

- Open /opt/local/apache2/conf/extra/httpd-vhosts.conf for editing.

You will see two example blocks for two different domains. It’s important to note that if Apache can’t match any of the domains listed, it will default to the first in the list. This may be an important consideration for thwarting mischief.

The examples provided in the file accomplish one of the two things we need to get done — they tell Apache what directory to use for each domain, but they do nothing to address what permissions Apache has in those directories. A lot of people put the permissions stuff in the main httpd.conf, but why not keep it all in one place and simplify maintenance while we reduce risk?

Here's an example:

<VirtualHost *:80>
    ServerAdmin you@your.email.com
    DocumentRoot "/www/sitename/public"
    ServerName sitename.com
    ServerAlias www.sitename.com
    ErrorLog "logs/sitename-error_log"
    CustomLog "logs/sitename-access_log" common
    <Directory "/www/sitename/public">
        Options FollowSymLinks
        AllowOverride None
        Order allow,deny
        Allow from all
    </Directory>
</VirtualHost>

You can see where it sets what directory to go to, where it says to treat www.sitename.com the same as sitename.com, and then in the Directory block it sets permissions. The actual permissions instructions are pretty arcane. The most important thing to note is the line

AllowOverride None

Here's the skinny: A lot of web apps like WordPress and Drupal need to set special rules about how certain requests are handled. They use a file called .htaccess to set those rules. By setting AllowOverride None, you're telling Apache to ignore those files. Instead, you can put those rules right in the <Directory> blocks in your vhosts file. It saves Apache the trouble of searching for .htaccess files on every request, and it's a more difficult target for hackers. .htaccess is for people who don't control the server. You do control the server, so you can do better.

- If others will be putting sites on the server and you don't want them fiddling with the config files, you can allow .htaccess to override specific parameters. Read up in the Apache docs to learn more.

- If you are using SSL, you also need to set up a VirtualHost entry for port 443. That entry will also include the locations of the SSL certificates.

- Add further blocks that match the domains you will be hosting.
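When you have several domains, writing these blocks by hand gets repetitive. Here's a sketch of a hypothetical bash function, vhost_block, that prints a block shaped like the example above for one site, following the /www/<sitename>/public layout. One caveat: Order allow,deny and Allow from all are Apache 2.2-style directives. Apache 2.4 only understands them when mod_access_compat is loaded; the 2.4 equivalent is the single line Require all granted.

```shell
# Hypothetical helper: print a VirtualHost block for one site.
# NAME is the folder under /www (and the log-file prefix), DOMAIN is the
# domain name, EMAIL is the ServerAdmin address.
# Usage: vhost_block NAME DOMAIN EMAIL
vhost_block() {
    local name=$1 domain=$2 admin=$3
    cat <<EOF
<VirtualHost *:80>
    ServerAdmin $admin
    DocumentRoot "/www/$name/public"
    ServerName $domain
    ServerAlias www.$domain
    ErrorLog "logs/$name-error_log"
    CustomLog "logs/$name-access_log" common
    <Directory "/www/$name/public">
        Options FollowSymLinks
        AllowOverride None
        Order allow,deny
        Allow from all
    </Directory>
</VirtualHost>
EOF
}
```

To add a site, you could append the output to the vhosts file:

vhost_block sitename sitename.com you@your.email.com | sudo tee -a /opt/local/apache2/conf/extra/httpd-vhosts.conf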

- Restart Apache and test your setup. http://127.0.0.1 should go to your default directory. Testing the domains is trickier if you don’t have any DNS entries set up for that server. I’ll write up a separate document about using /etc/hosts to create local domains for this sort of test.

- MILESTONE - You have done it. A fully operational LAMP environment on your Mac, suitable for professional Web hosting.

(Optional) Install phpMyAdmin

phpMyAdmin makes some database operations much easier. There have been security issues in the past, so you might reconsider on a production machine, but on a development server it can be a real time saver.

sudo port install phpMyAdmin

- Update your Virtual Hosts with the domain you want to use to access phpMyAdmin, which is by default at /opt/local/www/phpmyadmin/

- Test: log in as root.

- Configure - configuring phpMyAdmin fills me with a rage hotter than a thousand suns. It just never goes smoothly for me, whether I use their helper scripts or hand-roll it while poring over the docs. Maybe if I do it a few more times I’ll be ready to write a cookie-cutter guide for that, too. In the meantime, you’re better off getting advice on that one elsewhere.

Wrapping Up

I hope this guide was useful to you. I'm the kind of guy who learns by doing, and I've made plenty of mistakes in the past getting this stuff working. Funny thing is, when it goes smoothly, you wonder what the big deal was. Hopefully you're wondering that now.

If you find errors in this guide, please let me know. Things change and move, and I'd like this page to change and move with them.

Keep up to date: One of the big advantages of this install method is that updates to key software packages get to your server faster. Use that power. Run the update commands listed in step one regularly.

- The script that tests the PHP-MySQL connection is based on one I found at http://www.pinoytux.com/linux/tip-testing-your-phpmysql-connection

Appendices

Appendix 1: A brief explanation of sudo

In the UNIX world, access to every little thing is carefully controlled. There's only one user who can change anything they want, and that user is named root.

When you log in on a Mac, you're not root, and good thing, too. But as an administrator, you can temporarily assume the root role. You do this by preceding your command with sudo. (That's an oversimplification, and you will have earned another Geek Point when you understand why. In the meantime, just go with it. sudo gives you power.)

When you use sudo, you type your password and if the system recognizes you as an administrator it will let you be root for that command.

For convenience, you only have to type your password every five minutes, but you do need to repeat 'sudo' for each command.

Just remember, as root you can really mess things up.

Appendix 2: On editing text files and permissions

Jerry told me to edit the file, you lament, but he didn't say how. Kind of strange, considering the minute detail of the rest of the guide. The thing is, there's not one easy answer.

Let's start with the two kinds of text editors. There are editors like vim and pico that run right in terminal. They are powerful, really useful for editing files on a remote box, and if you know how to use them you're not reading this footnote. The other option is a windowed plain-text editor. TextEdit is NOT a plain-text editor. There are a lot of plain-text editors out there, and they all have their claims to fame. You can use any of them to edit these files.

Whoops! That brings us to the gotcha: permissions. In UNIX, who can change what is tightly controlled. Many of the files we need to edit are owned by root, the God of the Machine, so we need to get special permission to save our changes. Many of the plain-text editors out there will let you open the file, but when it comes time to save... they can't. You don't have permission.

Some editors handle this gracefully, however, and let you type your admin password and carry on. BBEdit and its (free) little brother TextWrangler give you a chance to type your password and save the file. I'm sure there are plenty of others that do as well.

BBEdit and TextWrangler also allow you to launch the editor from the command line, so where I say above edit ~/.profile, you can actually type edit ~/.profile and if you have TextWrangler installed, it will fire right up and you'll have taken care of the permissions issue. (If you decided to pay for BBEdit, the command is bbedit ~/.profile.) I'm sure there are plenty of other editors that do that too.

I'm really not endorsing BBEdit and TextWrangler here; they just happen to be the tools I picked up first. Over time I have become comfortable with their (let's call them) quirks. Alas, finding your text editing answer is up to you. If you're starting down this path, it's only a matter of time before you pick up rudimentary vim or pico skills; eventually you'll be using your phone to tweak files while you're on the road. It's pretty empowering. But is now the time to start learning that stuff? Maybe not. It's your call.

A Visit From Steve

Steve Jobs came to visit me in a dream last night. He was a younger version with badly-bleached hair that turned out on the orange side. He was very animated as we discussed the best way to add advanced table features to Safari. Steve was as intense as people say he was when he was alive, and we got along great.

How This Blog Works

Over the years, the technology behind this blog has gone from cave-dwelling stone-knives-and-bearskin static pages to cloud-city jet-packs-and-lightsaber dynamic yumminess. That transformation starts with WordPress but does not end there. Not by a long shot.

I started the Muddled Media Empire using a tool called iBlog, because it was free and worked with Apple’s hosting service, which I was already paying for. iBlog’s claim to fame was that it didn’t require a database – every time you made a change it went through and regenerated all pages that were affected. Toward the end, that was getting to be thousands of pages in some cases, each of which had to be uploaded individually. When iBlog’s support and development faltered, it was already past time for me to move on.

WordPress is an enormously popular Web-publishing platform. It comes in two flavors: you can host your blog on their super-duper servers and accept their terms of service and the slightly limited customization options, or you can install the code on your own server and go nuts. I chose the latter, mainly because I wanted to be able to touch the code. I’m a tinkerer.

So I signed up for a cheap Web host and set to work building what you see now. At first things were great, but after a while the host started having issues, and the once-great customer service withered up and vanished. So much for LiveRack. I think they just didn’t want to be in the hosting business anymore. I moved to iPage.

iPage was cheap, but I was crammed onto a server with a bunch of other people and sometimes my blog would take an agonizing time to load. Like, almost a minute. Then there was the time a very popular Geek site linked to my CSS border-radius table and iPage shut me down because the demand on the server was too much. Ouch! My moment in the sun became my moment at the bottom of a well.

I set out to find ways to make this blog more server-friendly and more user-friendly at the same time. Step 1: caching. WordPress doesn’t store Web pages, it stores data and the instructions on how to build a Web page. So, every time you ask to load a page here, WordPress fires up a program that reads from the database and assembles all the parts to the page. The thing is, that takes longer than just finding the requested file and sending it back, the way iBlog did. Caching is a way for the server to say, “hey, wait a minute – I just did this page and nothing’s changed. I’ll just send the same thing I did last time.” That can lead to big savings, both in time and server load.

I looked at a few WordPress caching programs and eventually chose W3 Total Cache, because it does far more than just cache data. For instance, it will minify scripts and CSS files (remove extra spaces and crunch them down) and combine the files together so the browser only has to make one request. It will zip the data, meaning fewer 1’s and 0’s moving down the pipe, and it does a few other things as well, one of which I will get to shortly.

I installed W3 Total Cache, and although some settings broke a couple of javascripts (for reasons I have yet to figure out – I’ll get to that someday), the features I could turn on definitely made a difference. Hooray!

But Muddled Ramblings and Half-Baked Ideas was still way too slow. I continued my search for ways to speed things up. I also began a search for a host that sucked less than iPage. (iPage was also starting to have outages that lasted a day or more. Not acceptable.) I decided I was willing to pay extra to be sure I wasn’t on an overwhelmed machine.

I’m not sure which came first – new server or Amazon Simple Storage Service. S3 is a pretty basic concept – you put your stuff on their super-duper servers, and when people need it they will get it really quickly. Things that don’t change, like images and even some scripts, can live there and your server doesn’t have to worry about them.

This is where W3 Total Cache earned my donation to their cause. You see, you can sign up for Amazon S3, and then put your account info into the proper W3TC panel and Bob’s Your Uncle. W3TC goes through your site, finds images and whatnot, puts them in your S3 bucket, and automatically changes all the links in your Web pages to point to your bucket instead of your own server. (Sometimes I find I have to copy the image to my S3 bucket manually, but that’s a small price to pay.)

Now a lot of the stuff on my blog, like the picture of me with the Utahraptors the other day, sits on a different, high-performance server out there somewhere, and no matter how overwhelmed my server happens to be at the moment those parts will arrive to you lickety-split. Amazon S3 is not free, however – each month I get an invoice for two or three cents. Should Muddled Ramblings suddenly become wildly popular, that number would increase.

About that server – the next stop on my quest for a good host was a place called Green Geeks. I wanted to upgrade to a VPS, which means I get a dedicated slice of a server that acts just like my very own machine. There is a lot to like about those, but my blog just wouldn’t run in the base level of RAM they offered. I upgraded and reorganized so that different requests would not take up more RAM than they needed. Still, I had outages. Sometimes the server would just stop freeing up memory and eventually choke and die. Since it was a virtual server in a standard configuration, logic says it was caused by something I was doing, but all my efforts to figure it out were fruitless, and Green Geeks ran out of patience trying to help me.

The server software itself is Apache. At this point I considered using nginx (rhymes with ‘bingin’ ex’) instead. It’s supposedly faster, lighter, and easier to configure. But, I already know Apache. I may move to nginx in the future, but it’s not urgent anymore.

During the GreenGeeks era I came across another service that improves the performance of Web sites while reducing the load on the servers. I recently wrote glowingly about CloudFlare, but I will repeat myself a bit here for completeness. CloudFlare is a service that has a network of servers all over the world, and they stand between you the viewer and my server. They stash bits of my site all around the world, and much of the time they will have a copy of what you need on hand, and won’t even need to trouble my server with a request. About half of all requests to muddledramblings.com are magically and speedily taken care of without troubling my server at all. They also block a couple thousand bogus requests to my server each day, so I don’t have to deal with them (or pay for the bandwidth). It’s sweet, and the base service is free.

Unfortunately, it was not enough to keep my GreenGeeks server from crashing. Once more I began a search for a new host. I found through word of mouth a place called macminicolo. Apple employees get a discount, but I wasn’t an Apple employee yet. It was still a bargain. For what turned out to be the same monthly cost of sharing part of a machine at GreenGeeks, I get an entire server, all to myself, with plenty of RAM. I’ve set up several servers on Mac using MacPorts, and I knew just how to get things up and running well. It costs less than half what a co-located server costs anywhere else I have found (Mac, Windows, or Linux). (Co-location has up-front costs, but in the long term saves money.) So I have that going for me.

The only thing missing is that at GreenGeeks I had a fancy control panel that made it much simpler to share the machine with my friends. I do miss that, but I’m ready now to host friend and family sites at a very reasonable cost.

So there you have it! This is just your typical Apache/WordPress/W3 Total Cache/Amazon S3/CloudFlare site run off a Mac mini located somewhere in Nevada. Load times are less than 5% of what they were a year ago. Five percent! Conservatively. Typically it’s more like 1/50th of the load time. Traffic is up. Life is good.

Now I have no incentive at all to learn more about optimization.

Must be a Very Long Train

I’m traveling to beautiful scenic Lawrence, Kansas this summer, and I thought I’d see if taking the train was an option. On the plus side, the Southwest Chief passes right through town; if I flew I’d have to arrange transport from Kansas City. On the minus side, traveling by rail in this country is pricey. Back on the plus side, a stop in Santa Fe for a few days is trivial – the train goes through Lamy.

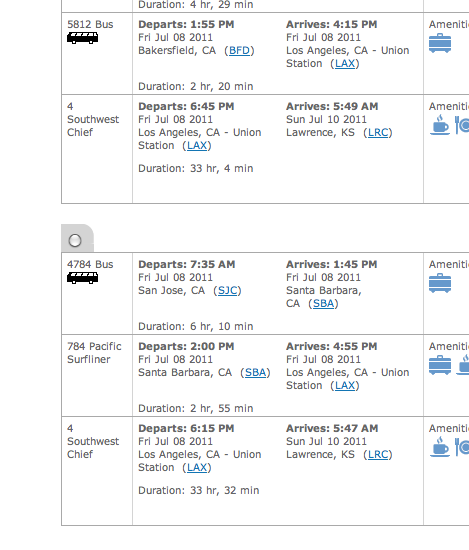

As I perused my options I came upon this table:

Note that, depending on how I reach Los Angeles, the Southwest Chief departs at different times. The back end of the train catches up with the front end over the course of the journey; the arrival time is almost the same.

Sometimes a movie maker will see a shot in a film and have to ask, “how did they do that?” Most of the time, a question like that is a compliment. But here I am, a Web/database guy, asking, “how did they do that?” and it’s with a disbelieving shake of the head. Who on this planet would design a system that allowed such inconsistency? Trust me, it takes extra work to get system behavior like that.

Don’t tell the people signing my time sheets every week, but this stuff is not that hard.

Securing Dropbox

As I mentioned recently, Dropbox is awesome. When using it, however, it’s important to think about security. The dropbox guys lock up your data nice and tight – but they hold the keys.

Think of it this way: You’re on a cruise ship, and you have a priceless diamond tiara (don’t we all?). You know it’ll be much safer in the ship’s vault than in your cabin. The ship’s purser is only too happy to watch over your valuables in their very strong safe. Now you can rest easy.

Except… there’s someone besides you who can open the vault. What if the government serves the purser with a warrant (or some other constitutionally questionable writ) and takes your tiara? What if someone fools the purser into handing it over? For most things, trusting the purser is fine, but that tiara is really something special. What you need, then, is a special box with a really strong lock. You give the purser the box, and neither he nor anyone else can see what’s inside. Make the box strong enough, and even if the purser hands over the keys to his vault, your stuff is still safe.

The same principle applies with Dropbox. It’s really convenient and pretty darn secure, but someone else is holding the keys. For most things, like my writing, no further security is necessary. Yet I have a few files that I don’t want to leave to someone else to protect, but I still want the convenience and data backup Dropbox provides. On my Mac I’ve set up a very simple system that lets me see my most secret files whenever I need to, on any of my machines, while protecting them from prying eyes. There’s almost certainly a direct analog on Windows.

The Disk Utility app that comes with Macs can create an encrypted disk image using pretty dang strong encryption. If you put that image file in your Dropbox folder, then any files you add to that virtual disk will be encrypted and saved to your Dropbox when you unmount the disk. Here are the steps:

- Fire up Disk Utility (it’s in the Utilities folder).

- Click New Image

- Decisions, decisions….

- Name your new disk. If you name it “secret stuff” that will just make people curious.

- Size: For reasons I’ll go into shortly, I’d advise not making this any bigger than you really need. If you’re protecting text files, it can be pretty small. The 100MB setting is probably more than enough for most people.

- Format: Just use the default

- Encryption: I say, what the heck. Go for the maximum unless you’ll be using a really old machine.

- Partition: just use the default.

- Image Format: sparse disk image – this will keep the size of the actual disk file down. UPDATE – As of Mac OS X 10.5, there’s a new option called “sparse bundle disk image”. DON’T USE THAT! It seems perfect at first (see below), but things get mucked up if there’s a conflict.

- Save. You will be asked for a password. You won’t need to memorize it, so make it good and strong, nothing like any password you’ve used anywhere else. Keep the “save in keychain” option selected. (If you need it later, you can find it with Keychain Access.) – Remember: this is the secret that protects all your other secrets.

- Voila! Put the disk image in your Dropbox folder. When you open the image file, a new hard drive will appear in the Finder. Anything you put on the drive will be added to the .dmg file you created.

- “Eject” the drive on that machine and open the .dmg on any other machines you want to share the information with. While you still remember your crazy password, save it in the keychains of your various machines.

A couple of notes:

- The .dmg file will only update when you “eject” the drive, so I advise you not to keep it mounted most of the time. Open it, add/access the files inside, and close it again. If you open it on two machines at the same time, you will end up with two versions in your Dropbox folder.

- I advised saving your password on your keychain, but remember that anyone who can access your computer can also access your secrets. So you might want to consider not putting the password in your laptop’s keychain, for instance, if you think it might fall into the wrong hands.

- Since your secret files are saved as a single blob of data, you won’t have automatic backups of individual files. If you need to recover one, you’ll have to find the right version of the image file.

- Since your information is saved as a big ol’ blob, if you make a huge .dmg file it will eat up space in your Dropbox and burn up unnecessary bandwidth each time you save. ‘Sparse’ images only grow toward their maximum size as you use the space (but never shrink unless you intervene with Disk Utility).

- UPDATE – Apple has created a new format that saves the image file as a whole bunch of little blobs, rather than one big one. With that option, when you make changes, only the little blobs that changed need to get updated. This was to make Time Machine work better, and at first I thought it would be perfect for Dropbox. Then I spent a few minutes testing and discovered that the way Dropbox handles conflicts (two computers updating the file at the same time) gets royally hosed when you use this format. Bummer. So, don’t use it.

- It’s possible to set things up to protect individual files, but it’s complicated. Hopefully it won’t always be.

- Important! If you only store the password on one machine, save it somewhere else, too! If you lose that password (if your hard drive crashes or your computer is stolen, for instance), you’re not getting into your strongbox. Ever. That was the whole point, after all.
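To see why a bundle of little blobs saves bandwidth compared with one big blob, here is a toy model in Python. It is a sketch under loud assumptions: the band size is a made-up, tiny value chosen for readability, and the code only counts the bytes that would need re-uploading. It is not how Disk Utility or Dropbox actually decide what to sync.

```python
import hashlib

# Hypothetical band size for illustration only; NOT the size Apple's
# sparse bundle format actually uses.
BAND_SIZE = 4


def band_hashes(data: bytes, band_size: int = BAND_SIZE) -> list[str]:
    """Split an image into fixed-size bands and fingerprint each one."""
    return [
        hashlib.sha256(data[i:i + band_size]).hexdigest()
        for i in range(0, len(data), band_size)
    ]


def bytes_to_upload_single_blob(old: bytes, new: bytes) -> int:
    """One big image file: any change means re-uploading the whole thing."""
    return 0 if old == new else len(new)


def bytes_to_upload_bundle(old: bytes, new: bytes, band_size: int = BAND_SIZE) -> int:
    """A bundle of bands: only bands that are new or changed get re-uploaded."""
    old_bands = band_hashes(old, band_size)
    new_bands = band_hashes(new, band_size)
    total = 0
    for index, digest in enumerate(new_bands):
        if index >= len(old_bands) or old_bands[index] != digest:
            start = index * band_size
            total += len(new[start:start + band_size])
    return total


old_image = b"aaaabbbbccccdddd"
new_image = b"aaaabbbbXcccdddd"  # one byte changed in the third band
```

With one byte changed, the single blob costs the full 16 bytes while the bundle costs only the 4-byte band that changed. The flip side, which the toy model does not capture, is the conflict handling described above.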

Excel 2011 for Mac, UNIX Time, and Visual Basic for Applications

Note to people looking for a formula: Yes, the code is here (for Mac and Windows, even). I tend to go long-winded even in technical articles, but if you’re dealing with converting UNIX time to Excel time, the answers lie below. You can skip ahead or read my brilliant and entertaining *cough* analysis first.

Microsoft Excel uses a method to represent time that is both smart and frustrating. How do they manage this? They take a good engineering solution, then fiddle with it. First some background:

Long before Y2K, people who knew what they were doing had already abandoned the practice of using strings of text to represent dates in a computer. Using strings like “3/10/2011” to represent a date has plenty of drawbacks, from cultural (is that March 10th or October 3rd?) to practical (sort 3/10/2011, 4/2/1902, 3/8/2012 as text and see what you get). Therefore long ago people who were smarter than I am came up with other ways to represent time. Happily, time is nice and linear. All you really need is a number line. Remember those? A number line stretches from zero to infinity in both directions. To measure time, all you need to do is decide on a zero point; then any point in the history of the universe can be represented by some number of time units from that instant.
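A quick Python sketch of the sorting problem and the number-line fix, using the three dates above. It assumes US month/day order when parsing; the helper names are made up for illustration.

```python
from datetime import date, datetime


def sort_as_text(values):
    """Sort date strings as plain text, character by character."""
    return sorted(values)


def sort_as_dates(values, fmt="%m/%d/%Y"):
    """Parse each string (US month/day order assumed) and sort chronologically."""
    return sorted(values, key=lambda s: datetime.strptime(s, fmt).date())


def days_since(zero: date, when: date) -> int:
    """Position of a date on a number line whose zero point we pick."""
    return (when - zero).days


dates = ["3/10/2011", "4/2/1902", "3/8/2012"]
text_order = sort_as_text(dates)    # ['3/10/2011', '3/8/2012', '4/2/1902']
date_order = sort_as_dates(dates)   # ['4/2/1902', '3/10/2011', '3/8/2012']

# The same string read with day/month order is a different day entirely.
as_us = datetime.strptime("3/10/2011", "%m/%d/%Y").date()      # 2011-03-10
as_european = datetime.strptime("3/10/2011", "%d/%m/%Y").date()  # 2011-10-03
```

Once dates are numbers on a line, sorting and subtracting just work, and days before the zero point simply come out negative.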

My first exposure to a more rational way to measure time was in the old Mac OS, which counted seconds from January 1, 1904. That count is an unsigned 32-bit number, and it gets too big for the computer to handle in February 2040. Ancient Macs will have a problem then. I blame the Aztecs.

The UNIX boys count seconds from January 1, 1970 at 00:00 UTC. You get special Geek Cred if you went to a party to celebrate second 1234567890 of the Unix epoch. Systems that store that count in a signed 32-bit number will break in January 2038, when the number of seconds gets too big to fit. (Note also that you can only go back a finite distance, to December 1901, before the negative number goes out of the range the processor can handle.)
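Here is a small Python sketch of those limits. It assumes a signed 32-bit counter (the classic 32-bit `time_t` case) and uses `timedelta` arithmetic instead of `datetime.fromtimestamp`, so negative values don't depend on the platform's C library.

```python
from datetime import datetime, timedelta, timezone

UNIX_EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)
INT32_MAX = 2**31 - 1
INT32_MIN = -(2**31)


def from_unix(seconds: int) -> datetime:
    """Seconds since 1970-01-01 00:00 UTC -> aware datetime (negative goes backwards)."""
    return UNIX_EPOCH + timedelta(seconds=seconds)


def wrap_int32(value: int) -> int:
    """Mimic storing an integer in a signed 32-bit slot (two's-complement wraparound)."""
    return (value + 2**31) % 2**32 - 2**31


party_second = from_unix(1234567890)   # 2009-02-13 23:31:30 UTC
last_second = from_unix(INT32_MAX)     # 2038-01-19 03:14:07 UTC
earliest = from_unix(INT32_MIN)        # 1901-12-13 20:45:52 UTC
one_tick_later = from_unix(wrap_int32(INT32_MAX + 1))  # wraps back to 1901
```

The wraparound is the real problem: one second past the limit, a 32-bit counter doesn't just stop, it jumps back to 1901.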

That’s all well and good, but I’m here to discuss Microsoft Excel today, and in particular Microsoft Excel for Mac. Excel counts in days, but allows fractional values: 12.5 represents noon twelve days after the zero point. I haven’t checked, but I think this system works for dates far, far into the future. So good on Microsoft for coming up with it. (As long as you don’t need dates before the zero time; in Excel, those are just strings again.)
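The days-plus-fraction idea in a few lines of Python. This is a sketch that deliberately ignores which zero point is in use; `split_serial` is a made-up name.

```python
from datetime import timedelta

SECONDS_PER_DAY = 60 * 60 * 24


def split_serial(serial: float) -> tuple[int, timedelta]:
    """Split an Excel-style day count into whole days and a time of day."""
    whole_days = int(serial // 1)
    fraction = serial - whole_days
    # Round to the nearest second to hide floating-point noise.
    time_of_day = timedelta(seconds=round(fraction * SECONDS_PER_DAY))
    return whole_days, time_of_day


noon_day_twelve = split_serial(12.5)   # (12, 12:00:00)
morning = split_serial(12.25)          # (12, 6:00:00)
```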

Of course, there are a couple of caveats. First: the historical oddity. In Excel, the day February 29, 1900 exists. Alas, there never was such a day; 1900 was not a leap year. Microsoft included this error because they wanted to be compatible with Lotus 1-2-3, which simply messed up. To change it now would cause problems, because the zero point for the Microsoft time is January 1, 1900: every date after February 1900 in every spreadsheet would suddenly be off by one. A thousand years from now we may still be calculating time based on the insertion of a bogus day.
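A Python sketch of what that phantom day does to a conversion out of the 1900 system. The function name is made up for illustration. It handles whole days only and rejects serial 60, the day that never happened.

```python
from datetime import date, timedelta

PHANTOM_SERIAL = 60  # Excel's 1900-02-29, a day that never existed


def serial_1900_to_date(serial: int) -> date:
    """Convert a whole-day serial from Excel's 1900 date system to a real date."""
    if serial < 1:
        raise ValueError("the 1900 system starts at serial 1 (1900-01-01)")
    if serial == PHANTOM_SERIAL:
        raise ValueError("serial 60 is the phantom 1900-02-29")
    if serial < PHANTOM_SERIAL:
        # Before the bogus day, serial 1 is 1900-01-01.
        return date(1899, 12, 31) + timedelta(days=serial)
    # After it, every serial is one higher than a true day count,
    # so the effective zero point shifts back a day.
    return date(1899, 12, 30) + timedelta(days=serial)
```

Serial 59 is February 28 and serial 61 is March 1. That one-day seam is why later dates need a different effective zero than early ones.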

Oh, except that Microsoft time doesn’t always start in 1900, and here’s where things start to get squirrelly. If you’re using Excel for Mac, the default day zero is January 1, 1904, so the bogus day vanishes (no negative dates in Excel, remember?). Mac Excel dates aren’t burdened by the bogus day. Except when they are. More on that in a bit.
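For comparison, here is a Python sketch of the 1904 system, again with hypothetical helper names. Serial 0 is January 1, 1904, and that same day is serial 1462 in the 1900 system, so moving between the two is a fixed offset.

```python
from datetime import date, timedelta

ZERO_1904 = date(1904, 1, 1)  # serial 0 in the 1904 date system
OFFSET_1904 = 1462            # 1904-01-01 is serial 1462 in the 1900 system


def serial_1904_to_date(serial: int) -> date:
    """No phantom day here: just count days from 1904-01-01."""
    return ZERO_1904 + timedelta(days=serial)


def serial_1904_to_1900(serial: int) -> int:
    """The same calendar day, expressed as a 1900-system serial."""
    return serial + OFFSET_1904
```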

I descended into Excel recently to write a macro that does fancy formatting based on data I dump from a Web-based tracking tool I’m building. The dates in my data are based on the UNIX epoch, so I need to convert them. I dump the raw data into one sheet and then display it correctly converted and formatted on the main sheet that people actually look at. Here’s the code I use in a cell of the spreadsheet that needs to show a converted date:

    =DATE(1970,1,1)+import!Z3/(60*60*24)

where the UNIX time is in cell Z3 of the ‘import’ sheet. This divides the UNIX time by the number of seconds in a day, which gives me the number of days that have passed since the UNIX zero time. The formula then adds the number of days from the spreadsheet zero to the UNIX zero time. (I could just say 86,400 instead of 60*60*24, but this way I can tell at a glance I’m dealing with days, and speed will not be an issue.) Happily, this formula will work on both Mac and Windows versions of Excel, because the DATE function returns the right value for the start of the UNIX epoch under whichever date system the workbook uses.
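The cell formula translated into a Python sketch, assuming whole UNIX seconds come in and an Excel serial number goes out. The two constants are just DATE(1970,1,1) evaluated in each date system, computed here rather than typed in. The function name is hypothetical.

```python
from datetime import date

SECONDS_PER_DAY = 60 * 60 * 24

# DATE(1970,1,1) in each Excel date system. For the 1900 system, 1899-12-30
# acts as day zero for any date after the phantom 1900-02-29.
UNIX_ZERO_SERIAL = {
    "1900": (date(1970, 1, 1) - date(1899, 12, 30)).days,  # 25569
    "1904": (date(1970, 1, 1) - date(1904, 1, 1)).days,    # 24107
}


def unix_to_excel_serial(unix_seconds: float, date_system: str = "1900") -> float:
    """Mirror =DATE(1970,1,1)+seconds/(60*60*24) for the given workbook date system."""
    return UNIX_ZERO_SERIAL[date_system] + unix_seconds / SECONDS_PER_DAY
```

As in the sheet, the only thing that changes between Mac-style and Windows-style workbooks is where 1970 lands; the seconds-to-days division is identical.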

So, no problem, right?

Well… except. I also have some more fancy work to do that requires scripting. The good news: Mac Excel 2011 uses Visual Basic for Applications (VBA), which while imperfect is a zillion times better than AppleScript. So away I went, coding with a twinkle in my eye and a song in my heart. To convert dates, I naturally followed the same plan I did in the sheet’s cells: get the value for 1/1/1970, then add the unix epoch days.

And the dates came out different. Yep, when scripting, Excel always uses the Windows zero time, even when the spreadsheet in question uses the Mac zero time. Dates calculated in cells in the sheet are four years (1,462 days, to be exact) different from dates calculated using the same method in a script.

Aargh. Of course, once I realized what the problem was, it was not too difficult to work around it. I just lost some of the portability of my code, because now it has to be tweaked based on what the zero date of the spreadsheet is.
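A Python sketch of the mismatch described above: compute a serial against the Windows (1900) zero, then read it back the way a 1904-system workbook would. The names are hypothetical, and the sketch only covers dates after February 1900.

```python
from datetime import date, timedelta

# Effective zero points for whole-day serials (1900 system: dates after Feb 1900 only).
ZERO = {"1900": date(1899, 12, 30), "1904": date(1904, 1, 1)}


def date_to_serial(d: date, date_system: str) -> int:
    return (d - ZERO[date_system]).days


def serial_to_date(serial: int, date_system: str) -> date:
    return ZERO[date_system] + timedelta(days=serial)


intended = date(2011, 3, 10)
# Computed against the Windows zero, as the script does...
serial_from_script = date_to_serial(intended, "1900")
# ...then displayed by a workbook that uses the Mac (1904) zero.
shown_in_mac_workbook = serial_to_date(serial_from_script, "1904")

# Workaround: shift by the distance between the two zero points (1,462 days).
fixed_serial = serial_from_script - (ZERO["1904"] - ZERO["1900"]).days
```

Subtracting those 1,462 days before handing the value to a 1904-system workbook undoes the shift, which is essentially what choosing the Mac zero date does in the VBA below.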

An aside here: If you’re here to resolve some date confusion in your own Mac spreadsheet, I strongly recommend you start by going to Preferences->Calculation->Workbook Options and uncheck the “use 1904 date” option. Unfortunately it will not recalculate the dates already entered in your sheets, so if that’s a problem then it’s too late for you, bucko. Read on.

Here’s some not-as-portable-as-it-could-be code. You need to choose one of the first two lines based on whether your sheet uses Mac dates or Windows dates:

    'excelZeroDate = DateSerial(1904, 1, 1) ' mac zero
    excelZeroDate = 0 ' DateSerial(1900, 1, 1) ' windows zero
    unixZeroDate = DateDiff("d", excelZeroDate, DateSerial(1970, 1, 1)) ' days from the zero date to 1970-01-01

Then if I have a date in the ‘import’ sheet to convert, I can write something like:

    startDate = DateAdd("s", Worksheets("import").Cells(dateRow, dateColumn).Value, unixZeroDate)

The nice part is that these functions handled converting seconds and days for me. Overall it’s not a bad system if you overlook the part where a single application gives two different answers to the same question.